KYLE BAILEY, HEAD OF TEACHING SCHOOL HUB, ARK TEACHING SCHOOL HUB, UK

ISABEL INSTONE, SECONDARY CURRICULUM LEAD, ARK TEACHER TRAINING, UK

LEANNE JHALLEY, EARLY CAREER FRAMEWORK LEAD, ARK TEACHING SCHOOL HUB, UK

NISHA KUMAR-CLARKE, NETWORK LEAD FOR PRIMARY PROFESSIONAL DEVELOPMENT, ARK, UK

Assessment can help facilitators and participants on programmes to make better decisions about what they know and what they don’t know. As teachers, we do this all the time with our students, using a combination of observations, working with students and reviewing student work.

At Ark Learning Institute, we run a range of teacher development programmes that span initial teacher training (ITT), the early career framework (ECF) (DfE, 2019), National Professional Qualifications (NPQ) and a wide range of Ark-designed development programmes to help our staff to keep learning.

We aim to utilise a range of approaches in diagnosing prior knowledge, uncovering misconceptions and, where appropriate, using deliberate practice to better understand our participants and help them to better understand their own development.

Formative assessment is a journey that underpins a relationship between teachers and teacher educators – it is about understanding both the end goal and how to get there. Getting teachers to respond to questions or practise aspects of teaching can therefore only be one part of the assessment.

This article will explore how we can use principles of assessment to make best use of deliberate practice. First, we’ll explore both the need for deliberate practice and its challenges, as well as how we leverage it as a powerful tool, particularly for our internally run development programmes.

Then we’ll consider the universal challenges of language: when using success criteria and/or rubrics, participants and teacher educators alike can struggle to make best use of assessment. This is because tacit knowledge is an obstacle for all educators, particularly when dealing with abstract concepts and for those who are relative novices in their domain.

Lastly, we’ll turn to the role that constraints play in teacher education, and in particular how deliberate practice can be made more effective by building better shared understanding, with greater emphasis on showing what success looks like.

Deliberate practice: Bridging the ‘knowing–doing gap’

Over the course of any programme, we aim to bridge the theory–practice gap or the so-called ‘knowing–doing gap’ (Allsopp et al., 2006). In fact, the current DfE ‘golden thread’ programmes that we deliver notably include both ‘learn that’ and ‘learn how to’ statements in each of the frameworks.

For many of our internal programmes at Ark, we bridge the knowing–doing gap by utilising deliberate practice and the work of Paul Bambrick-Santoyo in Get Better Faster (2016); this approach aligns closely with the discussion in the article by Grossman et al. (2009) surrounding the need for teacher education to include representation, decomposition and approximation of practice when working with teachers.

An example in practice

In our ‘mentoring for impact’ sessions, we aim to equip experienced teachers with the knowledge and skills to effectively mentor and coach others, as well as refine their own classroom practice. There are nine sessions over nine months, each lasting between 90 and 120 minutes. In the final session, mentors give a presentation on the area of the course that has had the most impact on their practice, using data to evidence that impact.

To be effective, mentors must be able to name what makes the strategy ‘sing’. It’s not enough to simply have a representation provided or to ‘see it’. Our mentors must be able to name the parts to be fluent in the strategy in order to coach effectively.

During our sessions on classroom climate strategies, coaches are given the opportunity to work with and build tools that allow them to coach for precision. They then have the opportunity to share examples of practice from their own settings, receive feedback, work collaboratively with colleagues and spar with colleagues and exemplars so that they feel confident in applying the basics. We believe that these sessions help to embody many of the recommendations about using deliberate practice seen within Deans for Impact’s (2016) ‘Practice with purpose’.

We see mentors being quite generic in their observations when the course begins, but these become specific quite quickly, particularly with the use of data-collection tools, which we use to push mentors to be more precise.

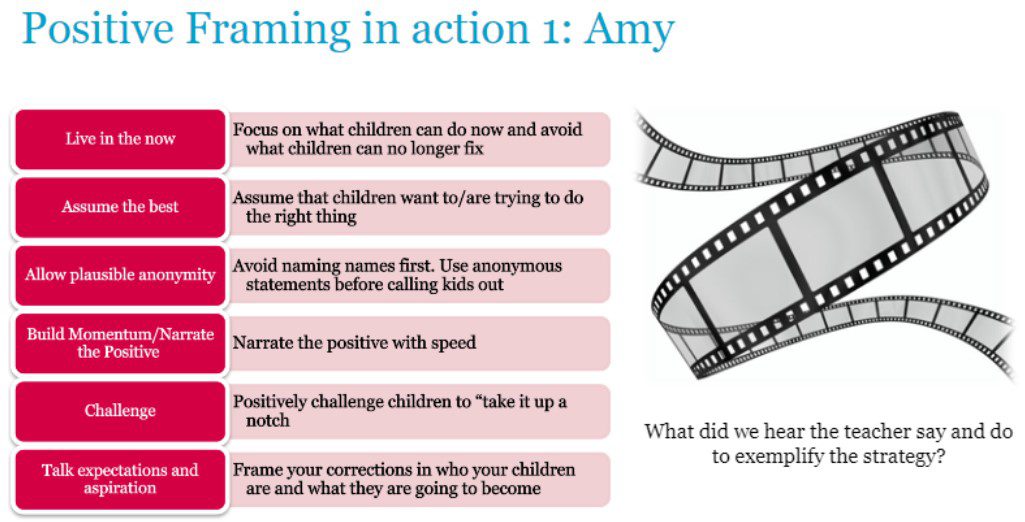

Figure 1 shows an example of the ‘do it’ part of the ‘see it, name it, do it’ model from a ‘positive framing’ session. Mentors are invited to watch and share specific examples. Once they’ve had a chance to share, we then share what we have observed, sometimes with an action step if it necessitates one.

Figure 1: An example of the ‘do it’ part of the ‘see it, name it, do it’ model from a ‘positive framing’ session

The difficulties of language: Building ‘tacit knowledge’

As you can see from Figure 1, there is quite a lot of tacit knowledge that might be built into our success criteria. This is why it is essential to:

- Clearly decompose the instructional practice before the session to ensure that we are as clear as possible when creating success criteria

- Live-model/think aloud when first introducing the success criteria

- Ensure multiple exemplifications so that all concepts are exemplified (in particular, concepts that are more abstract).

Providing multiple examples is particularly important when dealing with abstract concepts. In education, it is often the case that educators mistakenly use the same term to mean different things. Kelley (1927) calls this the ‘jingle’ fallacy, because the words may resemble one another but there is ‘no sufficient underlying factual similarity’ (Kelley, 1927, p. 64); in this example, it is quite possible that two teachers think that they have the same understanding of ‘positively challenging’, but in fact have vastly different notions of the term.

As Andrade and Du (2005) mention, rubrics are not a replacement for good instruction. When using rubrics and their criteria, ‘peer assessments can be cruel or disorienting, and self-assessment can be misguided or delusional’ (p. 29), particularly due to a lack of understanding of these terms. Christodoulou (2015) states that rubrics ‘are full of prose descriptions of what quality is, but they will not actually help anyone who doesn’t already know what quality is to acquire it’; therefore, it is essential in these instances to ensure that multiple exemplifications are given.

Building shared understanding by showing what good looks like

It might be tempting to race through the ‘see it, name it, do it’ cycle. While approaches such as deliberate practice in education have been shown to be powerful (Manceniedo et al., 2023), deliberate practice has huge limitations when not carefully considered (Macnamara et al., 2014). We’re aware that there’s emerging evidence about the impact that modelling can have on teachers (particularly those in teacher training, as seen in Sims et al., 2023), which is further reinforced by ‘modelling’ constituting one of the ‘active ingredients’ in teacher CPD (continuing professional development) (Sims et al., 2022).

For example, as part of our initial teacher training programme, we might support our novice teachers to develop ‘think alouds’, where the teacher makes their thinking processes explicit as they model how to write or solve a problem.

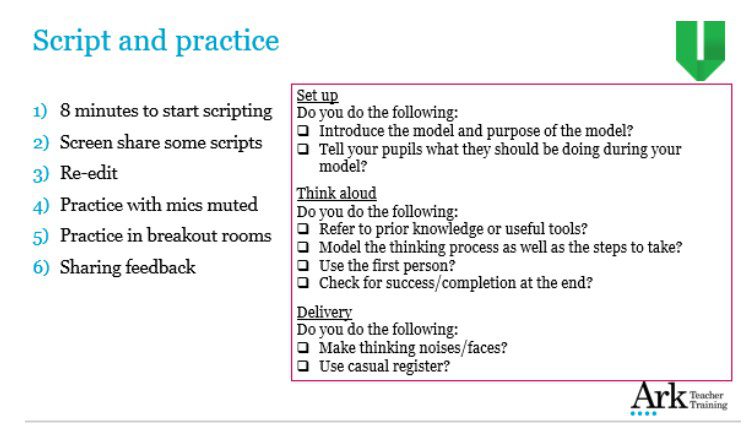

Figure 2: Planning, scripting and success criteria for a ‘think aloud’

In the example in Figure 2, trainees plan and script a ‘think aloud’ for an upcoming lesson before giving feedback to their peers, using the success criteria on the slide above. A key area for trainees to give feedback on is modelling the thinking process as well as the steps to take. This would likely be abstract to trainees, and would result in ineffective or unhelpful peer feedback, if the session hadn’t started by sharing multiple examples and non-examples, using both videos and scripts.

The impact that time, lack of aggregation and amount of knowledge have on andragogical choices

The constraint of time on our programmes is perhaps the biggest reason why we emphasise building shared understanding through multiple well-chosen models. Unlike teaching, where at best you’ll see your pupils daily, teacher education programmes don’t necessarily have this luxury. Teacher educators also need to get information about teachers through assessment, but with the challenges of delivery (such as online sessions), less time with participants and therefore, potentially, less knowledge of participants’ prior knowledge, they need to use strategies that allow them to gather meaningful information. One way of doing this is through open-ended questions, such as ‘What is a mental model?’ The responses gathered from all participants give insights into their thinking and help the teacher educator to identify next steps, such as stamping key points, picking out trends and addressing misconceptions. Although these responses are useful, we know that it can be particularly difficult to accurately diagnose understanding without a larger aggregation of prior data (Coe, 2018).

Time is further constrained by the sheer amount of knowledge that our educators need to acquire. Within the NPQ for Leading Teaching (NPQLT), there are 78 ‘learn that’ and 87 ‘learn how to’ statements (not including the entirety of the ECF, which forms the basis of Section 1 of the NPQLT framework; DfE, 2020). For most NPQ programmes, this means that there is less than an hour across the entire programme to cover each statement. As a result, it is paramount that teacher educators are careful about the weighting of all andragogical choices and have clarity about the extent to which the intended shared understanding has been achieved by all.

At Ark, all our programmes aim to assess our educators and support them in their own reflections on their current practices. Our emphasis on modelling and deconstructing tacit knowledge is an integral part of all our programmes. This is something that we are continually seeking to refine. In fact, this year, our ITT team is seeking to ensure that future trainees are even better supported by even more great examples – one way in which we’re doing this is by filming expert classroom practice across the network and deconstructing it. Regardless of programme, a key consideration is always how to best use the principles of formative assessment to support participants to consolidate what they know, and to identify next steps for their development.