JANE WAITE, RASPBERRY PI FOUNDATION, CAMBRIDGE AND RASPBERRY PI COMPUTING EDUCATION RESEARCH CENTRE, UNIVERSITY OF CAMBRIDGE, UK

Introduction

Resources related to AI and education are emerging, from international and country-specific policy guidance (e.g. Miao and Holmes, 2023; DfE, 2025), to suggested competency frameworks (e.g. Miao and Shiohira, 2024). When considering how AI technology may manifest in education, it helps to focus on three main potential areas of AI tool use: improving teacher productivity, teaching about AI technology and using AI tools to support the teaching of any subject.

This article focuses on emerging research themes related to teaching about AI, specifically introducing four key ideas: avoiding anthropomorphisation; introducing data-driven systems; taking into account Computational Thinking 2.0; and using SEAME (a simple framework for helping us to think about teaching about AI technology). These ideas are now being used to inform computer science education research (Waite et al., 2022, 2024) and were key design principles in the creation of the Raspberry Pi Foundation’s Experience AI resources, which teach about AI and AI literacy to 11- to 14-year-olds (Raspberry Pi Foundation, co-developed with Google DeepMind, 2023).

Avoiding anthropomorphisation

The topic of AI is infused with anthropomorphic language. To anthropomorphise can be defined as ‘to show or treat an animal, god, or object as if it is human in appearance, character, or behaviour’ (Cambridge Dictionary, nd). Anthropomorphisation can be seen as both a positive and a negative practice in the teaching and learning of a school subject (Salles et al., 2020).

From a positive viewpoint, using metaphors to make some aspects of AI systems appear human-like may initially help to demystify the complex technology for students with poor domain knowledge (Tang and Hammer, 2024). The introduction of anthropomorphisation through chatbots is expected to encourage people to use such systems, particularly certain groups such as those at risk of social exclusion, those with an interest in innovation and extroverts (Alabed et al., 2022).

However, anthropomorphisation may lead to incorrect mental models of how AI works, as the technology is humanised, black-boxed and oversimplified (Tedre, Toivonen et al., 2021). In some research, young children have been found to view robots as peers rather than devices, overestimating technology capabilities or seeing them as less-smart ‘people’ (Druga and Ko, 2021). Relationship-forming can also lead to serious risks of unintended influence or purposeful manipulation (Williams et al., 2022).

A further impact of attributing human-like characteristics to AI products is that students have been found to delegate the responsibility of the output to the AI agent rather than to the human system owner or developer or to the tool user (Salles et al., 2020). How anthropomorphised AI agents are portrayed to the user is also important. For example, AI agents have been predominantly ‘portrayed’ as female, potentially baking in female objectification and exacerbating gender power imbalances (Borau, 2024).

However, when students are engaged in learning about AI, they can become more sceptical about the technology and recognise that humans are responsible for AI tool design and use, and must check output accuracy and ensure that bias is not perpetuated (Druga and Ko, 2021; Tedre, Toivonen et al., 2021).

Careful use of language and images in teaching and learning can avoid or reduce anthropomorphisation. For example, illustrations that depict devices with human faces can be replaced with more technical images. Vocabulary associated with human behaviour (e.g. see, look, recognise, create) can be replaced with system-type words (e.g. detect, input, pattern-match, generate), both by teachers and in resource design. Guidance for language use in AI education resource design, such as that in Table 1, can be developed and implemented.

Table 1: An example of guidance that can be used to support the design of AI literacy resources avoiding anthropomorphised language, as used in the Experience AI resources (Raspberry Pi Foundation, co-developed with Google DeepMind, 2023)

Data-driven rather than rule-based problem-solving

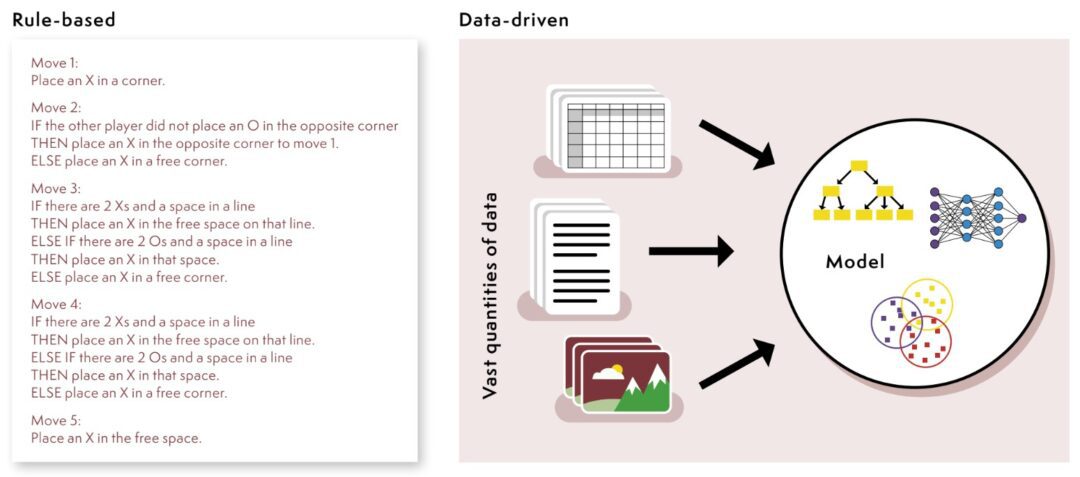

A specific term is needed to help us to talk about new AI technology: data-driven. This term has no anthropomorphic connotation but neatly ring-fences those AI technologies trained on data (Tedre, Denning et al., 2021). Generative AI and machine learning systems are data-driven technologies that process vast quantities of data to generate a model that can then be used to output new content or predictions. Figure 1 shows two images used with students to explain the difference between our traditional way of teaching programming through rule-based problem-solving and the new data-driven approach.

Figure 1: An example of how the concept of data-driven problem-solving can be explained to students, as used in the Experience AI resources (Raspberry Pi Foundation, co-developed with Google DeepMind, 2023)

In the first image, on the left, a set of rules (an algorithm) is shown for winning at noughts and crosses, which students could implement using a programming language such as Scratch or Python. The second image shows how different types of data, usually in vast quantities, are used to create models, such as neural networks, which are then used as the basis for data-driven systems. These images are examples of how this key concept of data-driven problem-solving can be introduced to students. Data-driven systems do not work in the same way as rule-based systems; with data-driven systems, we must deal with uncertainty, probability and a lack of explainability. Teachers and students need to understand the huge shift in the fundamentals of how some systems are now built, and how we must think when being critical consumers, agentic users or accountable creators of generative AI technology.
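The contrast between the two approaches can be sketched in a few lines of Python. This is an illustration only, not code from the Experience AI resources: the function names, the board representation (a nine-character string of "X", "O" and spaces) and the particular rules chosen are all assumptions made for the example. The 'model' here is a simple frequency count rather than a neural network, but it shows the same basic idea: behaviour comes from example data rather than from rules that a person wrote.

```python
from collections import Counter, defaultdict

# All eight winning lines on a 3x3 board, with squares numbered 0-8.
LINES = [(0, 1, 2), (3, 4, 5), (6, 7, 8),
         (0, 3, 6), (1, 4, 7), (2, 5, 8),
         (0, 4, 8), (2, 4, 6)]


def completing_square(board, mark):
    """Return an empty square that gives `mark` three in a row, or None."""
    for line in LINES:
        marks = [board[i] for i in line]
        if marks.count(mark) == 2 and marks.count(" ") == 1:
            return line[marks.index(" ")]
    return None


def rule_based_move(board, player):
    """Rule-based: a person wrote every rule, so each choice can be traced."""
    opponent = "O" if player == "X" else "X"
    # Rule 1: win if possible. Rule 2: otherwise block the opponent.
    for mark in (player, opponent):
        square = completing_square(board, mark)
        if square is not None:
            return square
    # Rule 3: otherwise take the centre, then a corner, then any free square.
    for square in (4, 0, 2, 6, 8, 1, 3, 5, 7):
        if board[square] == " ":
            return square
    return None  # the board is full


def train(examples):
    """Data-driven: build a 'model' by counting moves seen in example data."""
    model = defaultdict(Counter)
    for board, move in examples:
        model[board][move] += 1
    return model


def data_driven_move(model, board):
    """Predict the move seen most often for this board; None if never seen."""
    if board not in model:
        return None
    return model[board].most_common(1)[0][0]
```

For example, `rule_based_move("XX OO    ", "X")` returns square 2, and we can point to Rule 1 as the reason. The data-driven version can only reflect whatever examples it was trained on: if most example games open in a corner, it will open in a corner, whether or not that is a good move.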

Computational Thinking (CT) 2.0

Many of the day-to-day online tools that we use, such as search engines, combine rule-based and data-driven elements. But the two types of technology are very different, and we need a new way in which to think about the latter so that we can compare the two.

In thinking about traditional rule-based programming, there is the idea of Computational Thinking (CT), which, since the popularisation of data-driven technologies, has come to be termed CT1.0 (Tedre, Denning et al., 2021). For data-driven technology, we need a new computational thinking approach: CT2.0 (Tedre, Denning et al., 2021). This new way of thinking is not just for computer scientists; it is useful for anyone who is now a user of any software with a data-driven component. In CT1.0, we are interested in algorithms that are defined by people and implemented as readable programs. We can explain these programs, debug them and trace each step as it executes. When we run a CT1.0 program with the same inputs, it will produce the same output on any day, in any place and when run by anyone; it is deterministic. But when we run a CT2.0 program, a data-driven product, the output may well differ from one run to the next. You can ask a chatbot the same question twice and get quite different answers. In CT2.0, we can’t trace the logic of the program. Vast amounts of data have been used to train a complex model that is not explainable (yet). In CT2.0, we need to accept that sometimes the output may be incorrect, to beware of bias and to accept uncertainty in the output of computers. This paradigm shift manifests not only in the technical aspects of a system but also in its social and ethical impact. Educators, resource developers and researchers need ways in which to start to identify the different aspects of data-driven (or CT2.0) systems.
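A minimal sketch may help to make the difference in determinism concrete. The pass mark, the word list and the probabilities below are all invented for illustration; real generative systems are vastly more complex, but many of them do select their output by sampling from learned probabilities in broadly this way.

```python
import random


def rule_based_grade(score):
    """CT1.0 style: a fixed, readable rule. Same input, same output."""
    return "pass" if score >= 50 else "fail"


# Hypothetical learned probabilities for the word that follows "The sky is".
NEXT_WORD_PROBABILITIES = {"blue": 0.6, "grey": 0.3, "falling": 0.1}


def sample_next_word(probabilities, rng):
    """CT2.0 style: pick an output at random, weighted by the probabilities."""
    words = list(probabilities)
    weights = list(probabilities.values())
    return rng.choices(words, weights=weights, k=1)[0]


rng = random.Random()
# The rule always gives the same answer for the same input...
print([rule_based_grade(72) for _ in range(5)])
# ...whereas repeated sampling will usually give a mix of words.
print([sample_next_word(NEXT_WORD_PROBABILITIES, rng) for _ in range(5)])
```

Running the script several times gives students something to observe: the first line never changes, while the second usually does, and occasionally includes the unlikely word 'falling'.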

SEAME framework

A framework, SEAME, has been developed to support teaching and learning about AI (Figure 2). The framework was developed in response to a need, when providing professional development, to distinguish between very technical explanations and activities and those targeted at learning about the social implications (Waite and Curzon, 2018). The framework has been used to review teaching resources and research activities that teach about AI (Waite et al., 2023) and to categorise students’ emerging conceptions of AI (Whyte et al., 2024). Teachers can also use the framework to help them to start to unpick the complex topic of AI teaching and learning, as they can focus on just one level at a time.

Figure 2: The SEAME framework (Waite et al., 2023) and an example of its classroom resource use (Raspberry Pi Foundation, co-developed with Google DeepMind, 2023)

The framework has four levels: social and ethical, application, model and engine (Figure 2a). An example of a social and ethical learning objective would be ‘knows about the idea of bias in AI systems and can say how this impacts their lives’. An application-level objective would be ‘can identify everyday examples of AI technology’. A model-level objective would be ‘can train a simple classification model’, and an engine-level objective would be ‘can explain in simple terms how a neural network works’. Some learning activities will span more than one level, and as students progress, it is expected that they will become more able to move between levels. Importantly, the SEAME levels do not mandate the learning order. Rather, the levels provide a simple way for teachers, researchers and resource developers to start to talk about AI teaching and learning. For example, learning objectives can be mapped to SEAME to help to balance learning about the human-centred vs more technical aspects of data-driven systems (Figure 2b).
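For anyone auditing a scheme of work, this mapping can be as simple as tagging each objective with its level or levels and counting. The sketch below is one possible way to do that, using the four example objectives above; the function names and data layout are assumptions for illustration and are not part of the SEAME framework itself.

```python
from collections import Counter

SEAME_LEVELS = ("social and ethical", "application", "model", "engine")

# Example objectives from the article, each tagged with one or more levels.
OBJECTIVES = {
    "knows about the idea of bias in AI systems and can say how this "
    "impacts their lives": ["social and ethical"],
    "can identify everyday examples of AI technology": ["application"],
    "can train a simple classification model": ["model"],
    "can explain in simple terms how a neural network works": ["engine"],
}


def level_coverage(objectives):
    """Count how many objectives touch each SEAME level."""
    counts = Counter(
        level for levels in objectives.values() for level in levels
    )
    return {level: counts[level] for level in SEAME_LEVELS}


def missing_levels(objectives):
    """List the SEAME levels that no objective currently addresses."""
    coverage = level_coverage(objectives)
    return [level for level, count in coverage.items() if count == 0]
```

A unit whose objectives all sit at the model and engine levels would show up in `missing_levels` as lacking social and ethical and application coverage, which may prompt a conversation about balance rather than dictate a fix.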

Conclusion

In summary, more research is needed to find out what should be taught about AI in schools and how. However, emerging research evidence provides some starting points to help us to develop teaching resources, teacher professional development and, most importantly, classroom practice. Unless students form accurate mental models of the technology that infuses their lives, they will be disadvantaged, as they will not appreciate its risks or potential opportunities. Data-driven functionality works entirely differently from our old rule-based approach, and students must understand the new risks and opportunities. As you embark on your journey of teaching about AI technology, please consider how you can avoid anthropomorphisation, introduce the idea of a data-driven approach to problem-solving, incorporate CT2.0 and identify which level of the SEAME framework you are teaching. These ideas may help you as you start to equip your pupils with the competencies that they need to stay safe and live effectively in this new data-driven world.

The examples of AI use and specific tools in this article are for context only. They do not imply endorsement or recommendation of any particular tool or approach by the Department for Education or the Chartered College of Teaching, and any views stated are those of the individual. Any use of AI also needs to be carefully planned, and what is appropriate in one setting may not be elsewhere. You should always follow the DfE’s Generative AI In Education policy position and product safety expectations in addition to aligning any AI use with the DfE’s latest Keeping Children Safe in Education guidance. You can also find teacher and leader toolkits on gov.uk.